E-Pluribus | December 9, 2022

Government and Big Tech versus free speech; how Twitter is exposing media and government misinformation; and algorithms get a bad rap.

A round-up of the latest and best writing and musings on the rise of illiberalism in the public discourse:

Ilya Shapiro: Government–Tech Collusion Threatens Free Speech

Free speech can be seen both as a constitutional issue, limiting what government can do, and as a cultural one, which has more to do with the willingness of citizens to live and interact in a pluralistic society. Ilya Shapiro at City Journal writes about a case where these two elements intersect: how government sometimes uses indirect influence to suppress speech.

The Manhattan Institute, together with the Institute for Free Speech, has filed an amicus brief supporting the Changizi plaintiffs before the U.S. Court of Appeals for the Sixth Circuit. We argue that state action exists, and thus constitutional scrutiny attaches, when government threats—some not-so-loosely veiled—affect the decisions of private entities such that their actions effectively become those of the state. The same state action occurs when, in lieu of coercion, the government colludes with private actors.

We face untold regulatory challenges in adapting to the digital age, particularly with the explosion of social-media platforms as forums for expressing political ideas, but there’s little difference between twenty-first-century censorship and that which came before. Censorship by a government-coerced or -induced or -collaborating agent violates the First Amendment no less than an official order to “stop the presses.”

Alas, the district court in Changizi v. HHS didn’t appreciate the all-too-real prospect that government can drive social-media censorship. But the plaintiffs are entitled to prove that this possibility in fact came to fruition: by many accounts, numerous tech platforms followed the government’s direction and began heavily censoring or even banning users for their allegedly misleading posts about the pandemic. Indeed, recent reporting confirms that federal agencies colluded with and coerced platforms to suppress Covid-related “misinformation.”

The government justifies its speech suppression by calling it a fight against “domestic terrorism,” but the posts at issue are either factually accurate or simply state disagreements with government policy—and in any case, “fake news” can also be protected speech. The plaintiffs seek to hold the government liable for its transgressions.

Read it all.

Holman W. Jenkins, Jr.: Twitter and Disinformation Wars

As Elon Musk continues to reveal Twitter’s internal files on how it has dealt with alleged misinformation, Holman Jenkins of The Wall Street Journal says the media has a lot of introspection to do. Rather than holding the government accountable for its part in the disinformation wars, the media too often sides with the government for political reasons, exacerbating problems that the press is supposed to bring into the light.

Disinformation doesn’t have to be persuasive. It only has to confuse. In 1941 how did Stalin miss 151 divisions massing on his border? He didn’t. He was swamped with intelligence saying the Germans were about to invade—and also intelligence that the Germans thought Stalin was about to invade, and intelligence that the Germans were trying to trick Stalin into invading.

This is your model of how disinformation operated in the laptop smokescreen too, which wasn’t even slightly credible to anybody who thought about it for a moment. But it worked and now will come the deluge. The technological moment guarantees it. The sudden, dramatic increase in the geopolitical stakes guarantees it. Our information environment will fill with the disinformation of intelligence agencies, ours included, which won’t be able to leave these opportunities alone.

The alleged Russian meddling of 2016 was already a drop in the ocean compared with the flogging of Russian meddling by domestic agents trying to influence our politics. This column got interested in UFOs for one reason: the intelligence community report of June 25, 2021, when officials with access to classified information told us what they might believe about UFOs if they didn’t have access to classified information, a situation that can only lead to mischief, and has, which smarter officials, especially at NASA, are trying to fix.

Our media needs to up its own game; from personal knowledge, public servants involved in exposing FBI misdeeds during the 2016-19 era and who are by no means Trumpistas are nevertheless appalled by the media’s refusal to acknowledge reality, and rightly so.

Read it all here.

Elizabeth Nolan Brown: In Defense of Algorithms

When they come for the algorithms, Elizabeth Nolan Brown will be able to say she spoke out. Writing at Reason, Brown says algorithms are beneficial in a number of ways and, furthermore, are responsible for fewer negative outcomes than conventional wisdom suggests.

For the average person online, algorithms do a lot of good. They help us get recommendations tailored to our tastes, save time while shopping online, learn about films and music we might not otherwise be exposed to, avoid email spam, keep up with the biggest news from friends and family, and be exposed to opinions we might not otherwise hear.

So why are people also willing to believe the worst about them?

[ . . . ]

To some extent, the arguments about algorithms are just a new front in the war over free speech. It's not surprising that algorithms, and the platforms they help curate, upset a lot of people. Free speech upsets people, censorship upsets people, and political arguments upset people.

But the war on algorithms is also a way of avoiding looking in the mirror. If algorithms are driving political chaos, we don't have to look at the deeper rot in our democratic systems. If algorithms are driving hate and paranoia, we don't have to grapple with the fact that racism, misogyny, antisemitism, and false beliefs never faded as much as we thought they had. If the algorithms are causing our troubles, we can pass laws to fix the algorithms. If algorithms are the problem, we don't have to fix ourselves.

Blaming algorithms allows us to avoid a harder truth. It's not some mysterious machine mischief that's doing all of this. It's people, in all our messy human glory and misery. Algorithms sort for engagement, which means they sort for what moves us, what motivates us to act and react, what generates interest and attention. Algorithms reflect our passions and predilections back at us.

Read the whole thing.

Around Twitter

Here’s the beginning of a longer thread from the Executive Director of Heterodox Academy on his mission to improve higher education:

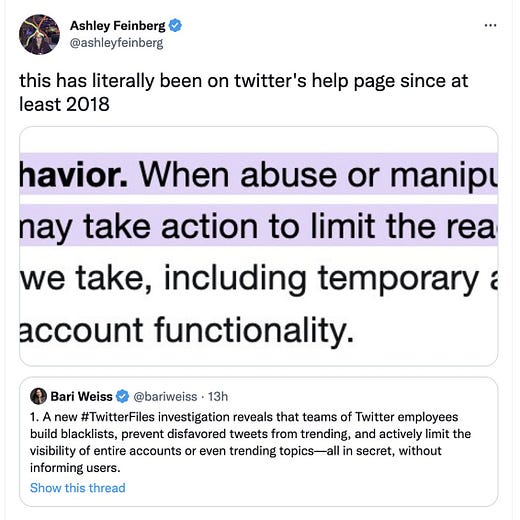

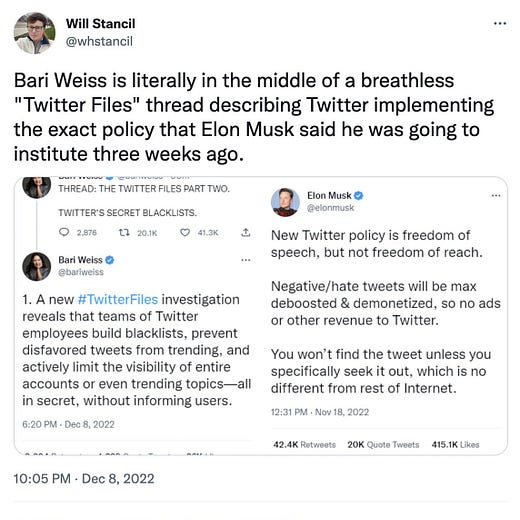

From Glenn Greenwald, how the previous management team of Twitter covered up “shadowbanning,” or, as former Head of Trust & Safety at Twitter Yoel Roth called it, “visibility filtering” (see below):

And finally, an announcement this week from Bari Weiss and her Substack Common Sense. Introducing The Free Press: